How on-device machine learning has changed the way we use our phones

Smartphone chipsets have come a long way since the early days of Android. While the vast majority of budget phones were woefully underpowered only a few years ago, today’s mid-range smartphones perform just as well as one- or two-year-old flagships.

Now that the average smartphone can comfortably handle everyday tasks, both chipmakers and developers have set their sights on loftier goals. Seen from that perspective, it’s clear why once-ancillary technologies like artificial intelligence and machine learning (ML) are now taking center stage. But what does on-device machine learning mean, especially for end-users like you and me?

In the past, machine learning tasks required data to be sent to the cloud for processing. This approach has many downsides, ranging from slow response times to privacy concerns and bandwidth limitations. However, modern smartphones can generate predictions completely offline thanks to advancements in chipset design and ML research.

To understand the implications of this breakthrough, let’s explore how machine learning has changed the way we use our smartphones every day.

The birth of on-device machine learning: Improved photography and text predictions

The mid-2010s saw an industry-wide race to improve camera image quality year-over-year. This, in turn, proved to be a key stimulus for machine learning adoption. Manufacturers realized that the technology could help close the gap between smartphones and dedicated cameras, even if the former had inferior hardware to boot.

To that end, almost every major tech company began improving their chips’ efficiency at machine learning-related tasks. By 2017, Qualcomm, Google, Apple, and Huawei had all released SoCs or smartphones with machine learning-dedicated accelerators. In the years since, smartphone cameras have improved wholesale, particularly in terms of dynamic range, noise reduction, and low-light photography.

More recently, manufacturers such as Samsung and Xiaomi have found more novel use-cases for the technology. The former’s Single Take feature, for instance, uses machine learning to automatically create a high-quality album from a single 15-second video clip. Xiaomi’s use of the technology, meanwhile, has progressed from merely detecting objects in the camera app to replacing the entire sky if you desire.

Many Android OEMs now also use on-device machine learning to automatically tag faces and objects in your smartphone’s gallery. This is a feature that was previously only offered by cloud-based services such as Google Photos.

Of course, machine learning on smartphones reaches far beyond photography alone. It’s safe to say that text-related applications have been around for just as long, if not longer.

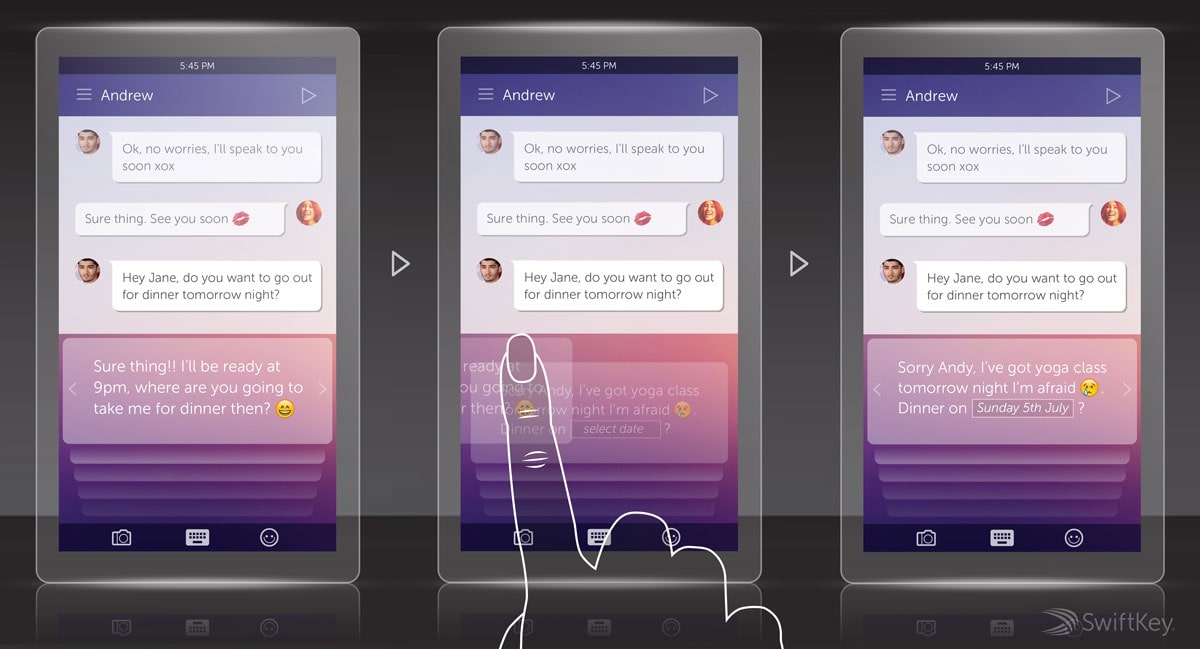

SwiftKey was perhaps the first to use a neural network for better keyboard predictions, all the way back in 2015. The company claimed that it had trained its model on millions of sentences to better understand the relationships between words.

Another hallmark feature came a couple of years later when Android Wear 2.0 (now Wear OS) gained the ability to predict relevant replies for incoming chat messages. Google later dubbed the feature Smart Reply and brought it to the mainstream with Android 10. You most likely take this feature for granted every time you reply to a message from your phone’s notification shade.

Voice and AR: Tougher nuts to crack

While on-device machine learning has matured in text prediction and photography, voice recognition and computer vision are two fields that are still witnessing significant and impressive improvements every few months.

Take Google’s instant camera translation feature, for example, which overlays a real-time translation of foreign text right in your live camera feed. Even though the results are not as accurate as their online equivalent, the feature is more than usable for travelers with a limited data plan.

High-fidelity body tracking is another futuristic-sounding AR feature that can be achieved with performant on-device machine learning. Imagine the LG G8’s Air Motion gestures, but infinitely smarter and for larger applications such as workout tracking and sign language interpretation instead.

As for speech, voice recognition and dictation have both been around for well over a decade at this point. However, it wasn’t until 2019 that smartphones could do them completely offline. For a quick demo of this, check out Google’s Recorder application, which uses on-device machine learning to automatically transcribe speech in real time. The transcription is stored as editable text and can be searched as well, which is a boon for journalists and students.

The same technology also powers Live Caption, an Android 10 (and later) feature that automatically generates closed captions for any media playing on your phone. In addition to serving as an accessibility function, it can come in handy if you’re trying to decipher the contents of an audio clip in a noisy environment.

While these are certainly exciting features in their own right, there are also several ways they can evolve in the future. Improved speech recognition, for instance, could enable faster interactions with virtual assistants, even for those with atypical accents. Google’s Assistant can already process voice commands on-device, but this functionality is sadly exclusive to the Pixel lineup. Still, it offers a glimpse into the future of this technology.

Personalization: The next frontier for on-device machine learning?

The vast majority of today’s machine learning applications rely on pre-trained models, which are generated ahead of time on powerful hardware. Running inference on such a pre-trained model (generating a contextual Smart Reply on Android, for example) only takes a few milliseconds.

Right now, a single model is trained by the developer and distributed to all phones that require it. This one-size-fits-all approach, however, fails to account for each user’s preferences. It also cannot be fed with new data collected over time. As a result, most models are relatively static, receiving updates only now and then.

Solving these problems requires the model training process to be shifted from the cloud to individual smartphones, which is a tall order given the performance disparity between the two platforms. Nevertheless, doing so would enable a keyboard app, for instance, to tailor its predictions specifically to your typing style. Going one step further, it could even take other contextual clues into account, such as your relationships with other people during a conversation.

Currently, Google’s Gboard uses a mixture of on-device and cloud-based training (called federated learning) to improve the quality of predictions for all users. However, this hybrid approach has its limitations. For example, Gboard predicts your next likely word rather than entire sentences based on your individual habits and past conversations.
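To make the idea of federated learning more concrete, here is a minimal sketch of one common formulation, federated averaging, written in plain Python. It is an illustration only and not Gboard’s actual implementation: the linear model, the function names (`local_update`, `federated_average`, `federated_round`), and the learning rate are all assumptions made for this example. Each simulated phone trains on its own private data, and only the resulting weights are sent back to be averaged.

```python
from typing import List, Tuple

Weights = List[float]
Example = Tuple[List[float], float]  # (features, target); hypothetical data format


def local_update(weights: Weights, data: List[Example],
                 lr: float = 0.1, epochs: int = 5) -> Weights:
    """Train a copy of the shared linear model on one device's private data."""
    w = list(weights)
    for _ in range(epochs):
        for features, target in data:
            prediction = sum(wi * xi for wi, xi in zip(w, features))
            error = prediction - target
            # One stochastic gradient descent step on the squared error.
            w = [wi - lr * error * xi for wi, xi in zip(w, features)]
    return w


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Combine per-device weights, weighting each by its number of examples."""
    total = sum(count for _, count in updates)
    size = len(updates[0][0])
    return [sum(w[i] * count for w, count in updates) / total
            for i in range(size)]


def federated_round(global_weights: Weights,
                    devices: List[List[Example]]) -> Weights:
    """One round: every device trains locally, then the server averages."""
    updates = [(local_update(global_weights, data), len(data))
               for data in devices]
    return federated_average(updates)


# Two simulated phones whose private data both follow y = 2x.
devices = [
    [([1.0], 2.0), ([2.0], 4.0)],
    [([3.0], 6.0)],
]
weights = [0.0]
for _ in range(20):
    weights = federated_round(weights, devices)
```

The raw examples never leave the `devices` list in this sketch; the server only ever sees weights. Production systems layer further protections on top of this basic loop, which the sketch leaves out.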

This kind of individualized training absolutely needs to be done on-device since the privacy implications of sending sensitive user data (like keystrokes) to the cloud would be disastrous. Apple even acknowledged this when it announced Core ML 3 in 2019, which allowed developers to re-train existing models with new data for the first time. Even then, though, the bulk of the model needs to be initially trained on powerful hardware.

On Android, this kind of iterative model re-training is best represented by the adaptive brightness feature. Since Android Pie, Google has used machine learning to “observe the interactions that a user makes with the screen brightness slider” and re-train a model tailored to each individual’s preferences.

With this feature enabled, Google claimed a noticeable improvement in Android’s ability to predict the right screen brightness within only a week of normal smartphone interaction. I didn’t realize how well this feature worked until I migrated from a Galaxy Note 8 with adaptive brightness to the newer LG Wing, which bafflingly includes only the older “auto” brightness logic.
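The general idea of re-training a shared model from slider interactions can be sketched in a few lines of Python. This is a toy illustration and not Android’s actual algorithm: the `base_brightness` curve, the `PersonalBrightness` class, the light-level buckets, and the learning rate are all hypothetical. A generic curve plays the role of the pre-trained model, and each manual slider adjustment nudges a per-user correction for that lighting condition.

```python
import math
from collections import defaultdict


def base_brightness(lux: float) -> float:
    """Generic curve shipped to every phone (hypothetical): 0.0 to 1.0."""
    return min(1.0, math.log10(lux + 1.0) / 4.0)


class PersonalBrightness:
    """Learns per-user corrections on top of the generic curve."""

    def __init__(self, learning_rate: float = 0.3) -> None:
        self.learning_rate = learning_rate
        # One learned offset per order of magnitude of ambient light.
        self.offsets = defaultdict(float)

    @staticmethod
    def _bucket(lux: float) -> int:
        return int(math.log10(lux + 1.0))

    def predict(self, lux: float) -> float:
        raw = base_brightness(lux) + self.offsets[self._bucket(lux)]
        return max(0.0, min(1.0, raw))

    def observe(self, lux: float, chosen: float) -> None:
        """Called when the user moves the slider to `chosen` at this light level."""
        error = chosen - self.predict(lux)
        # Move the offset a fraction of the way toward the user's choice.
        self.offsets[self._bucket(lux)] += self.learning_rate * error


model = PersonalBrightness()
# Simulate a user who repeatedly prefers a brighter screen indoors (~100 lux).
for _ in range(30):
    model.observe(lux=100.0, chosen=0.8)
```

After these simulated corrections, `model.predict(100.0)` lands close to the user’s preferred 0.8, while predictions for very different lighting conditions still follow the generic curve. All of the learning happens locally, so none of the slider data has to leave the phone.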

As for why on-device training has been limited to only a few simple use-cases so far, it’s pretty clear. Besides the obvious compute, battery, and power constraints on smartphones, there aren’t many training techniques or algorithms designed for this purpose.

While that unfortunate reality will not change overnight, there are several reasons to be optimistic about the next decade of ML on mobile. With tech giants and developers both focused on ways to improve user experience and privacy, on-device training will continue to evolve in new and exciting ways. Maybe we can then finally consider our phones to be smart in every sense of the word.

from Android Authority https://ift.tt/2UYCPMB
